What AI runs Gemini?

“What AI runs Gemini?” sounds like a trick question—because Gemini is the AI.

It’s the name Google uses for its own family of state-of-the-art models built by Google DeepMind.

What most people mean is: What powers Gemini under the hood? Which variants exist? How does it compare to other models like ChatGPT? And how do I actually get hands-on with it for text, images, and more?

This article breaks that down in plain English. We’ll cover:

- The Gemini family (Ultra, Pro, Flash, Nano—and the newer Gemini 2.5 Flash Image for image generation/editing).

- The infrastructure that runs Gemini (Google’s AI Hypercomputer on TPUs like v5p and newer inference hardware).

- Real capabilities: long context windows, multimodality, and safety watermarks like SynthID for images.

- A practical “how to use it” section (including a one-stop interface tip with Promptus for image work via “Nano Banana,” i.e., Gemini 2.5 Flash Image).

Sprinkled throughout are tips to help you move from curiosity to creation. Let’s dive in. 🏊‍♀️

First, what is Gemini?

Gemini is Google’s umbrella name for a multimodal AI model family—meaning it can reason across text, images, audio, and more. Over time, Google has shipped multiple tiers to address different needs:

- Ultra – the flagship capability tier for the hardest tasks.

- Pro – a strong general-purpose model (popular in APIs and the Gemini app).

- Flash – optimized for speed and cost at scale.

- Nano – distilled for on-device tasks (think mobile scenarios).

The big story is long context: Gemini 1.5 Pro accepts up to 2 million tokens, so you can feed it huge documents, repositories, or multi-asset packets and get coherent output.

That’s not just an academic spec; it unlocks real workflows like “give the model my entire product brief, style guide, and example assets” without constant chopping and pasting.
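To make the long-context point concrete, here is a minimal planning sketch that checks whether a bundle of documents is likely to fit in a 2M-token window. The 4-characters-per-token ratio and the output reserve are assumptions for illustration; real counts depend on the model's tokenizer, and the function names are hypothetical.

```python
# Assumption: roughly 4 characters per token for English text. Real counts
# depend on the model's tokenizer, so treat this as a planning estimate only.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 2_000_000  # the 2M-token window cited for Gemini 1.5 Pro


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: dict[str, str], reserve_for_output: int = 8_192) -> tuple[bool, int]:
    """Return (fits, estimated_total_tokens) for a bundle of documents.

    reserve_for_output is a hypothetical allowance for the model's reply.
    """
    total = sum(estimate_tokens(body) for body in documents.values())
    return total + reserve_for_output <= CONTEXT_LIMIT, total


# Placeholder strings stand in for real file contents.
bundle = {
    "product_brief.md": "x" * 40_000,
    "style_guide.md": "y" * 120_000,
}
fits, total = fits_in_context(bundle)
print(fits, total)
```

If the estimate lands near the limit, it is safer to count tokens with the provider's own tooling before sending the request.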

The image side: Gemini 2.5 Flash Image (a.k.a. “Nano Banana”) 🖼️➡️✨

In 2025, Google introduced Gemini 2.5 Flash Image—a state-of-the-art image generation and editing model.

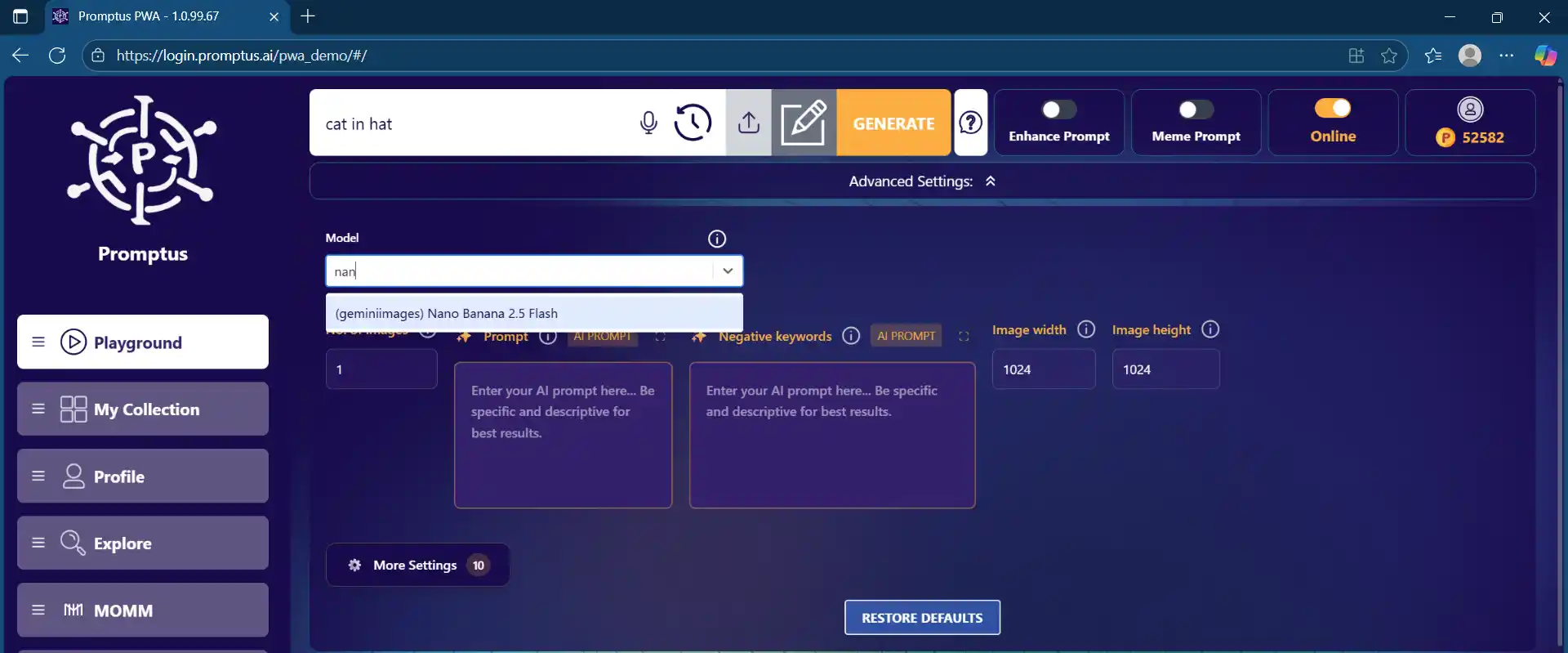

If you saw the nickname “Nano Banana,” that’s an internal codename widely referenced by the community; the official model name is Gemini 2.5 Flash Image. What’s special here?

- Prompt-based local edits: blur backgrounds, remove objects (even a person), recolor, or restyle using natural language.

- Multi-image fusion: blend multiple inputs into a single, coherent result.

- “World knowledge”: better semantic understanding for more faithful edits.
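As a rough illustration of prompt-based edits and multi-image fusion, the sketch below assembles a request body with several images followed by one text instruction. The `contents` / `parts` / `inline_data` shape reflects the Gemini API's REST format as commonly documented, but treat the field names as an assumption and check the current reference. The helper name and the placeholder bytes are hypothetical.

```python
import base64
import json


def build_image_edit_body(prompt: str, images: list[tuple[str, bytes]]) -> dict:
    """Build a generateContent-style JSON body: image parts first, then the instruction.

    images is a list of (mime_type, raw_bytes) pairs.
    """
    parts = [
        {"inline_data": {"mime_type": mime, "data": base64.b64encode(raw).decode("ascii")}}
        for mime, raw in images
    ]
    parts.append({"text": prompt})
    return {"contents": [{"parts": parts}]}


# Placeholder bytes stand in for real image files in this sketch.
body = build_image_edit_body(
    "Blend these two photos into one scene and blur the background.",
    [("image/png", b"fake-png-bytes"), ("image/jpeg", b"fake-jpeg-bytes")],
)
print(json.dumps(body, indent=2)[:200])
```

The same body works for a single-image edit: pass one image and an instruction such as "remove the person in the background".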

You can try it right now via Promptus and in Google AI Studio or the Gemini API, where the model name is labeled as a preview. It’s also rolling into products and partner platforms.

Side note: Google has also announced upgraded image editing directly in the Gemini app, focusing on consistent likeness for people/pets and style blending, which is handy for quick changes without leaving the app.

So… what runs Gemini? (Infrastructure & performance) ⚙️

Under the hood, Gemini trains and serves on Google’s AI Hypercomputer—a vertically integrated stack combining datacenter networking, compilers, and custom silicon.

The best-known chips are TPUs (Tensor Processing Units). A key milestone here is TPU v5p, designed for training cutting-edge generative models; more recent updates focus on inference efficiency at scale. Together, this stack gives Gemini its combination of scale, speed, and cost-performance.

In 2025, Google also highlighted Ironwood TPUs for inference, tuned for high-throughput serving of large models.

That matters because latency and throughput often determine whether advanced features (like 2M-token context or multi-image fusion) feel snappy enough for production.
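A back-of-the-envelope way to reason about this: response time is roughly the time to read the prompt plus the time to generate the reply. The sketch below uses that simple two-term model. The throughput numbers are hypothetical placeholders, not measured Gemini or TPU figures, and the function name is made up for illustration.

```python
def estimate_latency_seconds(
    prompt_tokens: int,
    output_tokens: int,
    prefill_tokens_per_s: float,
    decode_tokens_per_s: float,
) -> float:
    """Simple model: time to process the prompt plus time to generate the reply."""
    if prefill_tokens_per_s <= 0 or decode_tokens_per_s <= 0:
        raise ValueError("throughput values must be positive")
    return prompt_tokens / prefill_tokens_per_s + output_tokens / decode_tokens_per_s


# Hypothetical numbers chosen only to show the arithmetic.
full_window = estimate_latency_seconds(2_000_000, 500, 50_000, 100)
short_prompt = estimate_latency_seconds(2_000, 500, 50_000, 100)
print(full_window, short_prompt)
```

Under these made-up rates, a full 2M-token prompt is dominated by prompt processing, while a short prompt is dominated by generation, which is why serving hardware tuned for both phases matters.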

How Gemini compares to ChatGPT

Both Gemini and ChatGPT are front doors to families of models. While feature sets shift constantly, a few contrasts are stable:

- Context window: Gemini 1.5 Pro’s 2M tokens (now broadly available to developers) sets a high bar for “long-form” tasks; OpenAI’s upper limits are also expanding, but the exact numbers and availability vary by model/version.

- Real-world product tie-ins: Gemini shows up across Google’s ecosystem (Search, Docs/Sheets/Gmail integrations, Android features), while ChatGPT has strong platform breadth via OpenAI’s own tools and partners. (This changes frequently; always check current docs.)

- Image tooling: Gemini 2.5 Flash Image is Google’s native solution for image generation/editing, complete with SynthID watermarking.

Rather than declare a universal “winner,” it’s better to pick the tool that best fits your task, latency, budget, and deployment surface.

How to actually use Gemini (fast start)

1. The Google route

- Gemini app (consumer): ask questions, draft, and now do image edits inline.

- Google AI Studio (developers): pick a model (e.g., Gemini 1.5 Pro or Gemini 2.5 Flash Image), prototype prompts, and export code. The 2.5 Flash Image endpoint is currently labeled preview.

- Vertex AI (cloud production): serve Gemini models with enterprise controls and MLOps workflows; Gemini 2.5 Flash Image is rolling out here too.
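For the developer path, a text request to the Gemini API can be sketched with only the standard library. This assumes the public REST endpoint under `generativelanguage.googleapis.com/v1beta` and the `x-goog-api-key` header. The model ID `gemini-1.5-pro` and the key placeholder are assumptions, so confirm current model names in AI Studio. The request is built but not sent.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent POST request for a text prompt."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


req = build_generate_request(
    "gemini-1.5-pro",  # assumed model ID; check the model list for current names
    "Summarize our style guide in five bullets.",
    "YOUR_API_KEY",  # placeholder, not a real key
)
print(req.get_method(), req.full_url)
# Sending requires network access and a valid key:
#   with urllib.request.urlopen(req) as resp: data = json.load(resp)
```

AI Studio's code export produces SDK-based snippets that are usually more convenient. The raw request is shown here only to make the moving parts visible.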

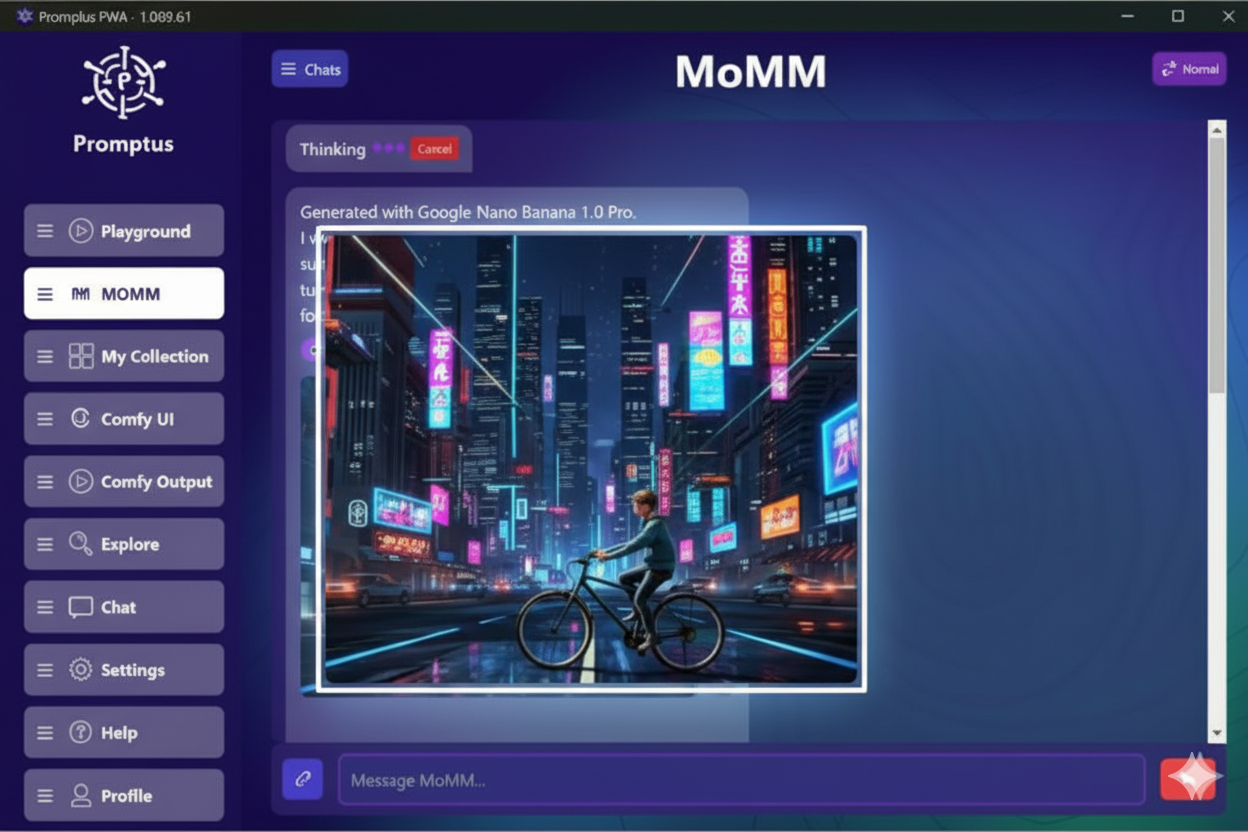

2. A one-stop creative AI agent (images ➜ video ➜ music) with Promptus 🍌🎵

If your goal is creative media with the fewest moving parts, Promptus gives you a single web interface where you can:

- Generate & edit with “Nano Banana” (the community nickname for Gemini 2.5 Flash Image).

- Organize results in Collections.

- Publish to a gallery—no exporting overhead.

- Expand an image into video and music/audio on the same platform.

In short: Promptus becomes your creative hub, with model access, versioning, curation, and multi-media add-ons without tool-hopping. (Feature availability can vary by region/account; check your workspace.)

Where Gemini shines today ✨

- Long-context workflows: ingest lengthy PDFs, codebases, meeting transcripts, and style guides in one go—then ask nuanced, cross-document questions.

- Multimodal analysis: combine text + images (and more) inside one prompt for richer reasoning.

- Creative image work: fast iterations, local edits by prompt, and multi-image fusion in Gemini 2.5 Flash Image—with SynthID for responsible AI.

- Ecosystem reach: integrations across Google products and partner platforms (e.g., Promptus announcing access to Gemini 2.5 Flash Image) help you slot Gemini into existing pipelines.

Safety & provenance: why the watermarking matters 🛡️

Every image created or edited with Gemini 2.5 Flash Image carries an invisible SynthID watermark. That’s not a limitation—it’s a feature for transparency and downstream trust (think: media, education, advertising). As generative images spread, having machine-detectable signals that say “this was created or altered with AI” helps teams comply with policies and reduce misinformation risk.

Frequently asked: “Is Nano Banana the same as Gemini?”

Short answer: Nano Banana is a community-referenced codename; the official product name you’ll see in Google tooling is Gemini 2.5 Flash Image (e.g., in AI Studio / the API). So when a platform’s dropdown says “Gemini 2.5 Flash Image,” that’s the one people have been calling “Nano Banana.”

A practical mini-playbook 🧰

- Try quick edits in the Gemini app

  - Upload a photo, ask: “Remove the person behind me and soften the background.”

  - Great for personal moments and social posts.

- Prototype in Google AI Studio

  - Create an app using Gemini 1.5 Pro for language + Gemini 2.5 Flash Image for visuals.

  - Use the 2M-token context to stash brand rules and sample assets in the same session.

  - Export code straight from the browser.

- Go end-to-end in Promptus

  - Pick Gemini 2.5 Flash Image (the “Nano Banana” you’ve heard about).

  - Generate → Save → Publish: keep outputs in Collections, share a gallery, and then animate or add audio, all without switching tools.

  - Ideal for creators who value speed and cohesion in their pipeline.

- Scale on Vertex AI

  - When you’re ready for production, switch to Vertex AI for security controls, quotas, monitoring, and team workflows, and use the same Gemini models.
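For the AI Studio step in the playbook above, one simple way to stash brand rules and reference material in a single long-context prompt is to wrap each document in labeled delimiters and put the question last. This is a sketch of one possible convention. The delimiter format and the function name are assumptions, not something Gemini requires.

```python
def pack_context(documents: dict[str, str], question: str) -> str:
    """Join named documents into one prompt with clear boundaries, question last."""
    sections = [
        f"=== BEGIN {name} ===\n{body.strip()}\n=== END {name} ==="
        for name, body in documents.items()
    ]
    sections.append(f"Question: {question}")
    return "\n\n".join(sections)


# Placeholder content stands in for real brand documents.
prompt = pack_context(
    {
        "brand_rules.md": "Use sentence case in headlines.\nPrimary color: teal.\n",
        "sample_copy.txt": "Meet the lamp that dims itself.",
    },
    "Does the sample copy follow the brand rules?",
)
print(prompt)
```

Labeling each source by name makes it easier to ask cross-document questions and to have the model say which file an answer came from.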

What this means for teams 🧑‍💻🧠

- Product & design: Long context means product specs, user research, and design tokens can live together in a single session. Use Gemini 2.5 Flash Image to iterate product shots and marketing images quickly.

- Developers: Prototype in AI Studio, deploy via Vertex AI, and rely on Google’s TPU-powered infrastructure for serving performance.

The bottom line

- Gemini isn’t “run by” some other AI—Gemini is Google’s AI.

- It’s delivered as a family (Ultra, Pro, Flash, Nano) and keeps expanding with specialized models like Gemini 2.5 Flash Image for visual creation/editing.

- It’s powered by Google’s AI Hypercomputer on TPUs optimized for both training and inference, which underpins its speed, scale, and cost profile.

- If you want the simplest creative flow for images → video → music without tool-hopping, Promptus is a strong single-workspace option—letting you generate, edit, organize, and publish in one place (and pick the “Nano Banana”/Gemini 2.5 Flash Image model when you need visuals).

Gemini’s “smartness” will keep evolving, but the direction is clear: bigger context windows, better multimodal reasoning, faster/cheaper serving, and safer outputs. That combo is why so many teams are building with it right now. ⚡
