Is Nano Banana Gemini?

Short answer: yes. “Nano Banana” is the codename for Gemini 2.5 Flash Image, Google’s image generation and editing model. The nickname is not just community slang: Google’s developer blog introduced the model as “Gemini 2.5 Flash Image (aka nano-banana).”

TL;DR

- Nano Banana = Gemini 2.5 Flash Image. It’s Google’s newest image model for creation and editing.

- Highlights: multi-image fusion, character consistency, natural-language edits, and invisible SynthID watermarks.

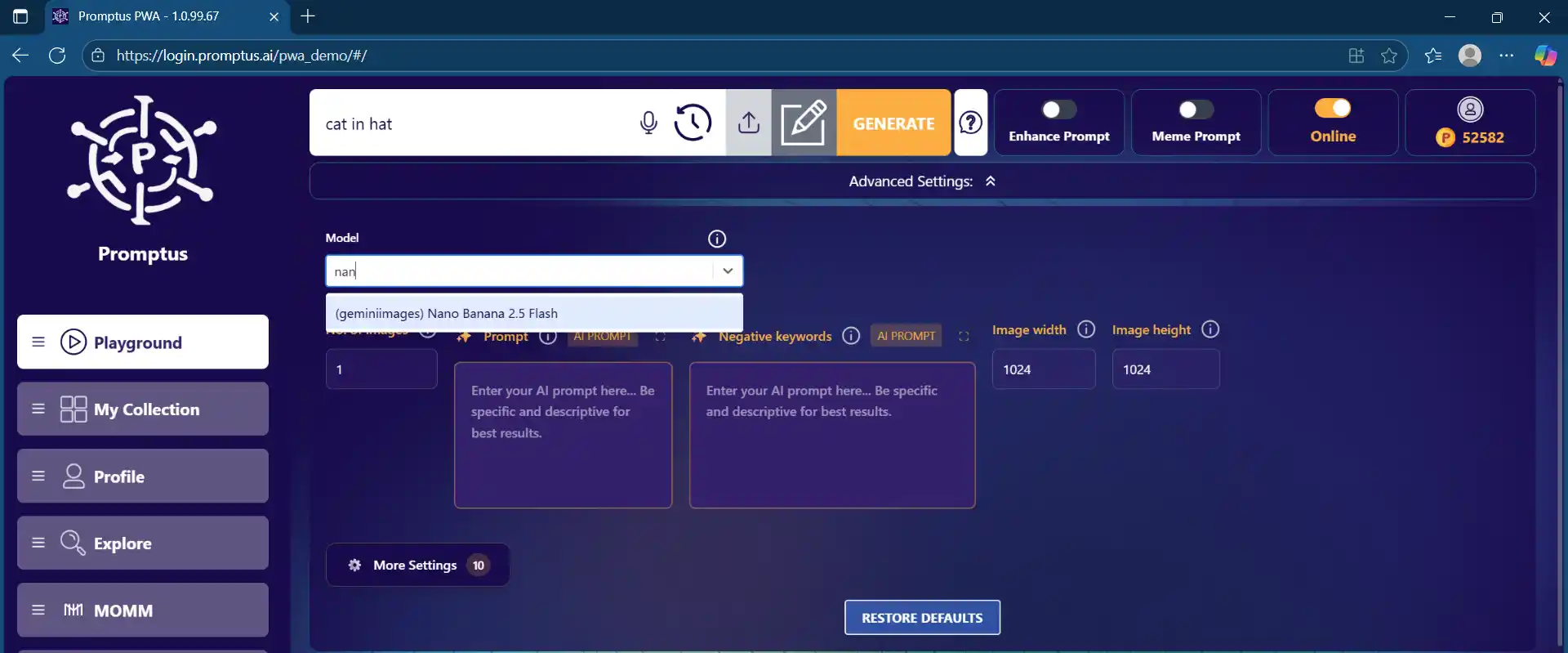

- Where to try it: Google AI Studio / Gemini API (developers), Vertex AI (enterprise), and creator platforms like Promptus for an all-in-one workflow.

🍌 What “Nano Banana” actually is

If you’ve seen creators talking about “Nano Banana,” they’re referring to Google’s Gemini 2.5 Flash Image model—the latest entry in the Gemini family that combines image generation with precise, prompt-based editing.

Google’s own announcement notes the playful codename (“aka nano-banana”), which is why you’ll hear both names used interchangeably in creator and developer circles.

Under the hood, it’s the image-specialized sibling of Gemini 2.5 Flash, tuned to deliver quick responses, high quality, and robust editing controls.

Think of it as a creative Swiss Army knife: generate from scratch, blend multiple inputs, and surgically edit regions with plain language like “remove the stain,” “blur the background,” or “change the jacket to red.”

🎛️ Why creators care: the standout capabilities

Here’s what sets Nano Banana (Gemini 2.5 Flash Image) apart, in practical creator terms:

- Multi-image fusion. Drop several images in and have the model smartly compose them into a single, coherent result—great for product imagery, mood boards, and composite scenes.

- Character consistency. Keep the same subject looking like themselves across scenes and prompts—crucial for brand mascots, stylized portraits, or episodic storytelling.

- Prompt-based editing. You can do fine-grained, local edits using natural language—no masking tools required. Blur backgrounds, remove people, recolor items, and more.

- World-knowledge-aware. The model can interpret sketches and diagrams with more semantic understanding than many earlier image systems, helping with educational or instructional visuals.

- Iterative refinement. You can feed the newly generated image back into the model and continue editing—handy for creative “dialing-in” without starting over.

- Invisible watermarking (SynthID). Outputs are tagged with an invisible digital watermark to help indicate AI involvement—part of Google’s platform-level safeguards.


🧰 Where you can use Nano Banana (Gemini 2.5 Flash Image)

Depending on your role, different doors lead to the same model:

- Developers: Use the Gemini API or experiment in Google AI Studio. Both give you prompt boxes, sample apps, and code export so you can integrate the model into your tools.

- Teams / enterprise: Deploy through Vertex AI to fit into GCP security, governance, and scaling patterns.

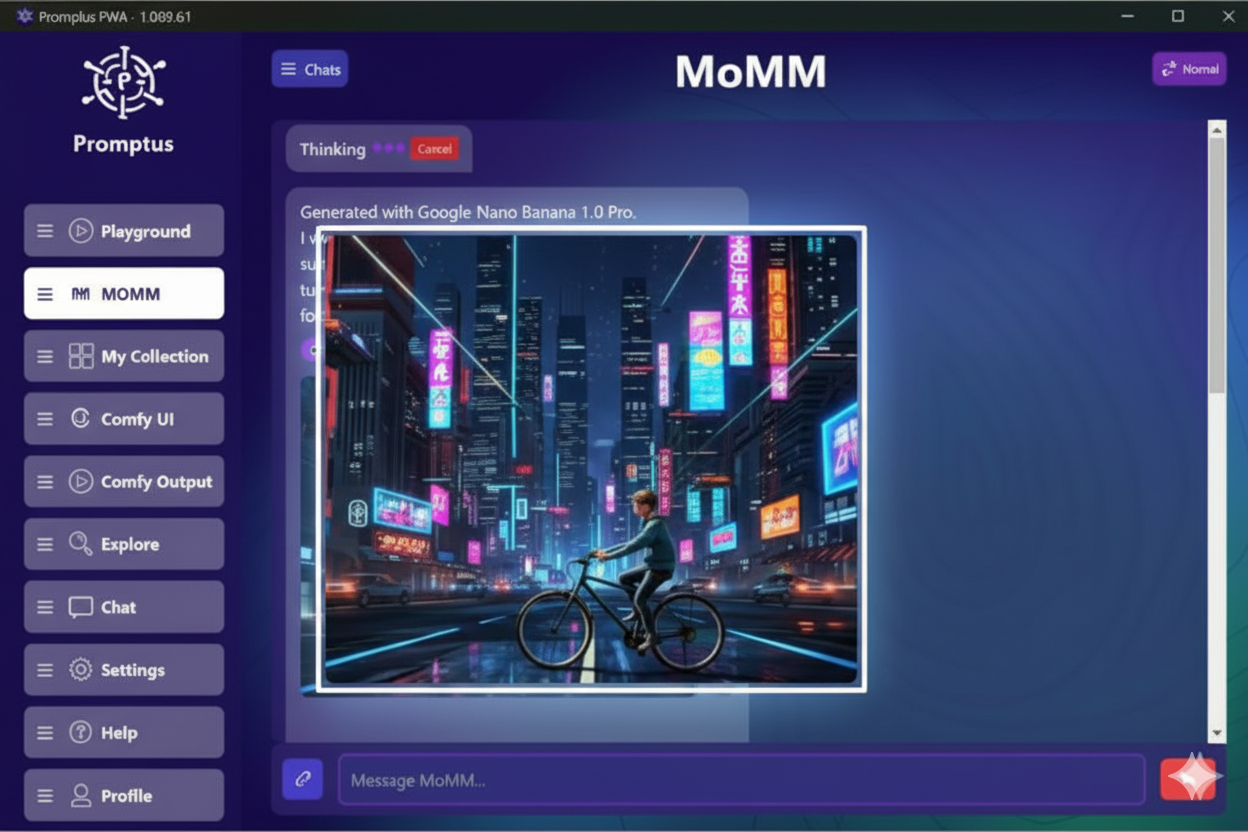

- Creators and solo makers: Use a creator-centric platform like Promptus to keep everything in one place—generation, editing, saving/organizing, publishing galleries, and even expanding your images into video and music without bouncing between tools (more on this just below).

💡 Note: Google’s own launch post also mentions partner routes (OpenRouter and fal.ai) that surface the model in broader developer ecosystems. If your stack already includes these services, keep an eye there as well.
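For the developer route, it helps to see what a request looks like. Here is a minimal sketch, using only the Python standard library, that builds the JSON body for the Gemini API's REST `generateContent` endpoint. The model ID is an assumption (it is the preview ID used around launch, so check the current model list), and `build_request` is a hypothetical helper, not part of any Google SDK.

```python
import base64
import json

# Assumption: model ID as used around launch; confirm against the current model list.
MODEL_ID = "gemini-2.5-flash-image-preview"
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL_ID}:generateContent"
)


def build_request(prompt, images=()):
    """Build a generateContent body from a prompt and optional (mime_type, bytes) images."""
    parts = [{"text": prompt}]
    for mime_type, data in images:
        parts.append({
            "inline_data": {
                "mime_type": mime_type,
                # Image bytes travel base64-encoded inside the JSON body.
                "data": base64.b64encode(data).decode("ascii"),
            }
        })
    return {"contents": [{"parts": parts}]}


body = build_request("A banana-shaped desk lamp on a walnut side table, warm light")
print(ENDPOINT)
print(json.dumps(body, indent=2))
```

To actually send it, POST the JSON to the endpoint with your API key in the `x-goog-api-key` header. The official SDKs wrap the same call, so treat this as a way to understand the shape of the request rather than the recommended integration.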

🚀 Why many creatives start with Promptus

If your priority is to create rather than configure SDKs, Promptus provides a streamlined, creator-friendly flow:

- One workspace, many outputs. Generate and edit with Nano Banana, then instantly save into Collections. No manual file shuffling.

- Publish in a click. Curate a public gallery for portfolios, launches, or client shares—directly from the same interface you used to create.

- Beyond stills. When you want motion or sound, Promptus offers built-in tools to animate images into video and generate music/audio, so your visuals can evolve into reels, teasers, or mood tracks without exporting to a different app.

- Model choice without the mess. Promptus surfaces hundreds of models across image, video, and audio, so you can test alternatives when a job needs a different style or strength.

For makers who don’t want to juggle ten tabs, that “create → save → publish” flow—plus the path to video and music—is a time-saver.

✍️ A quick mental model: how to work with Nano Banana

When you sit down to create, the workflow often looks like this:

- Start with intent. Decide if you want to generate from text, edit an existing image, or fuse multiple references. (All three are supported.)

- Draft a prompt that’s specific but flexible. For example:

  - “Photoreal portrait of the same skateboarder from my reference, golden-hour lighting, shallow depth of field.”

  - “Replace the street background with a neon Tokyo alley, keep subject’s jacket and hair unchanged.”

- Iterate with natural-language edits. Ask for “reduce glare,” “soften shadows,” or “shift palette toward teal-orange.” The model applies local edits without hard-to-learn brushes.

- Fuse multiple references. Drop in a product shot, a texture swatch, and a room layout, then prompt: “Place this lamp on the side table; match the walnut grain and warm ambient lighting.”

- Refine and export—or continue into video/music if you’re in Promptus and want to extend the piece beyond a still.
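If you are scripting the iterate step rather than clicking through a UI, the loop above boils down to "take the image you just got, attach it to the next instruction, send again." The sketch below shows that hand-off with plain dictionaries. It assumes the REST response carries images as base64 in an `inlineData` part (it also accepts the snake_case spelling to be safe), and both function names are hypothetical helpers. The response here is a stand-in so the example runs offline.

```python
import base64


def extract_image(response):
    """Return (mime_type, raw_bytes) for the first image part in a response dict, or None."""
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            # Assumption: REST responses use camelCase keys; accept snake_case too.
            inline = part.get("inlineData") or part.get("inline_data")
            if inline:
                mime = inline.get("mimeType") or inline.get("mime_type")
                return mime, base64.b64decode(inline["data"])
    return None


def next_edit_request(response, instruction):
    """Build the follow-up request: previous output image plus a new edit instruction."""
    image = extract_image(response)
    if image is None:
        raise ValueError("response contained no image to refine")
    mime, data = image
    return {
        "contents": [{
            "parts": [
                {"text": instruction},
                {"inline_data": {
                    "mime_type": mime,
                    "data": base64.b64encode(data).decode("ascii"),
                }},
            ]
        }]
    }


# Stand-in for a real API response, so the sketch runs without network access.
fake_response = {
    "candidates": [{
        "content": {"parts": [
            {"text": "Here is your image."},
            {"inlineData": {"mimeType": "image/png",
                            "data": base64.b64encode(b"fake-png").decode("ascii")}},
        ]}
    }]
}

follow_up = next_edit_request(fake_response, "Reduce glare and soften shadows")
print(follow_up["contents"][0]["parts"][0]["text"])
```

Each pass sends only the latest image plus one instruction, which keeps edits small and easy to undo: if a step goes wrong, go back to the previous image and rephrase.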

🔍 Quality, speed, and cost: what to expect

- Speed: The “Flash” family emphasizes low latency—snappy feedback that helps you iterate quickly.

- Quality: Gemini 2.5 Flash Image was launched to deliver higher-quality images and stronger creative control than earlier image options in the Flash line, particularly around consistency and editing precision.

- Pricing: Google’s launch post describes API pricing in output tokens (with a concrete per-image token count for the model). If you’re building with the Gemini API directly, check Google’s latest pricing table; if you use a platform like Promptus, you’ll pay in that platform’s credit or subscription system.
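To make the token-based pricing concrete, here is a quick back-of-the-envelope calculation. The two constants are assumptions taken from the figures quoted around launch ($30.00 per 1 million output tokens, 1,290 output tokens per image); prices change, so verify both against Google's current pricing table before budgeting.

```python
# Assumptions: figures quoted around launch; verify against the current pricing table.
PRICE_PER_MILLION_OUTPUT_TOKENS_USD = 30.00
OUTPUT_TOKENS_PER_IMAGE = 1290


def image_cost_usd(num_images):
    """Estimated API cost in USD for generating num_images images."""
    tokens = num_images * OUTPUT_TOKENS_PER_IMAGE
    return tokens * PRICE_PER_MILLION_OUTPUT_TOKENS_USD / 1_000_000


print(f"1 image:    ${image_cost_usd(1):.4f}")
print(f"100 images: ${image_cost_usd(100):.2f}")
```

Under those assumptions a single image works out to a little under four cents, so a session of a few dozen iterations costs on the order of a dollar or two.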

🧩 When should you reach for Nano Banana vs. other models?

- Use Nano Banana when you need reliable editing and consistent subjects (photo repair, product swaps, branded characters, precise background changes). The world-knowledge angle also helps when your brief involves diagrams, labels, or “understanding” what’s in a sketch.

- Consider alternates when you want wildly stylized art or niche aesthetic quirks that a particular open-source model nails. In a platform like Promptus, you can A/B test quickly because you’re already working inside a multi-model library.

🧪 Hands-on example prompts to try

- Multi-image fusion:

“Combine my ‘product.jpg’ and ‘room.jpg’. Place the product on the coffee table, match reflections, and harmonize lighting to early-morning sunlight.”

- Character consistency across scenes:

“Using this headshot, create three images: (1) city rooftop at dusk, (2) beach boardwalk at noon, (3) cozy bookstore interior—face and outfit consistent.”

- Targeted local edit:

“Remove the coffee stain on the shirt, keep fabric texture realistic, slightly warm the overall color temperature.”

- World-knowledge assist:

“Turn this hand-drawn pulley diagram into a clean infographic, label forces and directions, use monochrome palette.”

You can run these in AI Studio (dev sandbox), wire them up via the Gemini API, or execute them right from Promptus if you want to stay in one creative environment.
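If you wire the character-consistency prompt up via the API, one option is to send one request per scene, each with the same reference image and the same consistency constraint, instead of asking for all three images at once. The sketch below only generates the prompt text; `scene_prompts` is a hypothetical helper, and whether per-scene requests beat a single combined prompt is something to test on your own material.

```python
SCENES = [
    "city rooftop at dusk",
    "beach boardwalk at noon",
    "cozy bookstore interior",
]


def scene_prompts(scenes, keep="face and outfit"):
    """One prompt per scene, each repeating the same consistency constraint."""
    return [
        f"Using the attached headshot, place the same person in this scene: {scene}. "
        f"Keep {keep} consistent with the reference."
        for scene in scenes
    ]


for prompt in scene_prompts(SCENES):
    print(prompt)
```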

🧭 So…is Nano Banana “Gemini”?

Yes. Nano Banana is a nickname Google itself uses for the Gemini 2.5 Flash Image model.

If you’re reading docs or dashboards, you’ll typically see the formal name (“Gemini 2.5 Flash Image”); in communities and even Google’s own blog post, you may see Nano Banana used interchangeably.

Either way, you’re talking about the same technology: a fast, capable image model that can generate, edit, and understand visuals with strong consistency, practical controls, and built-in watermarking.

🧱 Getting started today

- I’m a developer: Open Google AI Studio to prototype, then integrate via the Gemini API in your app or service.

- I’m on a creative team: Ask your platform admin about Vertex AI access, or try a creator-centric platform like Promptus to keep your workflow in one place—generate → edit → organize → publish → expand to video & music—without juggling exports.

Final take 🎨🧠

Nano Banana (Gemini 2.5 Flash Image) blends rapid iteration (“Flash”) with fine-grained control over how images look and change over time.

If your work calls for consistent characters, composited scenes, or text-guided micro-edits, it’s a compelling leap from older “prompt-and-pray” image generators. Pair it with a workspace like Promptus and you can move from first draft to gallery-ready—and even to motion and music—in a single flow.

In other words: Gemini’s brain, your taste, one clean pipeline. 🍌
