How smart is Gemini AI?

Introduction

“How smart is Gemini AI?” sounds like a single question, but it’s really three:

- Which Gemini are we talking about? Google now uses “Gemini” to refer to a family of models. There are powerful cloud models (e.g., Gemini 1.5 Pro/Flash and the image-focused Gemini 2.5 Flash Image) and an on-device model called Gemini Nano that runs locally on Android.

- What does “smart” mean? In practice, “smart” involves several dimensions: how much context a model can handle, how well it reasons across text + images + audio, how current and grounded its answers are, how safe and reliable its outputs are, and how well it integrates with tools you already use.

- How does Gemini compare to ChatGPT? Useful comparisons focus on context window, real-time grounding, multimodality, speed/latency, tooling and integrations, and where computation happens (cloud vs. on-device). For example, Gemini’s developer platform can connect directly to Google Search for real-time grounding and citations, while OpenAI’s GPT-4.1, like Gemini 1.5, supports context windows of up to 1 million tokens.

This article breaks down each dimension with simple tests you can try and ends with practical guidance: when to pick Gemini’s cloud models, when Gemini Nano shines, and where Promptus fits if you want a quick way to do creative image work with Gemini 2.5 Flash Image (informally nicknamed “Nano Banana”).

What “smart” looks like in day-to-day use

1) Long-context understanding

Gemini’s developer docs and launch blog introduced very large context windows: Gemini 1.5 models support 1M-token contexts, and Gemini 1.5 Pro extends to 2M tokens. Long context matters for tasks like reviewing lengthy PDFs, reasoning over codebases, or analyzing timelines across dozens of documents. (blog.google, Google AI for Developers)

On the OpenAI side, GPT-4.1 (released April 2025) explicitly targets long-context reliability and supports up to 1M tokens of context, up from GPT-4o’s 128K limit. In other words, both ecosystems now treat “bring your whole project” as a baseline use case rather than a parlor trick. (OpenAI, The Verge)

What to try: paste a long, messy spec (dozens of pages), then ask the model to map decision points to source paragraphs and generate a risk register with citations back to the input. If it can do this and recover the right passages, you’re seeing genuine long-context competence—not just glib summaries.
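
One way to score that test mechanically: ask the model to return each finding with the index of the paragraph it relies on plus a short verbatim quote, then check the quotes yourself. The sketch below assumes that response format (a list of dicts with hypothetical `text`, `para`, and `quote` keys) and splits the spec into paragraphs on blank lines; it is an illustration, not a built-in Gemini or OpenAI feature.

```python
import re


def split_paragraphs(doc: str) -> list[str]:
    """Split a document into paragraphs on blank lines, collapsing inner whitespace."""
    return [" ".join(p.split()) for p in re.split(r"\n\s*\n", doc) if p.strip()]


def check_citations(doc: str, claims: list[dict]) -> list[dict]:
    """Verify that each claim's quote appears in the paragraph it cites.

    Each claim is a dict with hypothetical keys:
      text  - the model's finding
      para  - 0-based index of the cited paragraph
      quote - a short verbatim excerpt the model says supports the finding
    """
    paras = split_paragraphs(doc)
    results = []
    for claim in claims:
        idx = claim["para"]
        quote = " ".join(claim["quote"].split()).lower()
        # Out-of-range indices count as failures instead of raising IndexError.
        verified = 0 <= idx < len(paras) and quote in paras[idx].lower()
        results.append({"text": claim["text"], "para": idx, "verified": verified})
    return results


def citation_accuracy(results: list[dict]) -> float:
    """Fraction of claims whose citation was verified (0.0 when there are no claims)."""
    if not results:
        return 0.0
    return sum(r["verified"] for r in results) / len(results)


if __name__ == "__main__":
    spec = (
        "Overview of the billing service.\n\n"
        "The API must support OAuth 2.0 for all partner integrations.\n\n"
        "The p99 latency budget is 200 ms."
    )
    claims = [
        {"text": "Auth requirement", "para": 1, "quote": "must support OAuth 2.0"},
        {"text": "Latency budget", "para": 1, "quote": "200 ms"},  # cites the wrong paragraph
    ]
    results = check_citations(spec, claims)
    print(results)
    print(citation_accuracy(results))
```

Here the second claim is flagged because the 200 ms figure lives in paragraph 2, not paragraph 1. Exact substring matching is deliberately strict; a fuzzy matcher would tolerate lightly paraphrased quotes at the cost of letting some wrong citations through.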

2) Grounded, up-to-date answers

For tasks that require fresh facts, the Gemini API and Google AI Studio offer Grounding with Google Search. With it enabled, Gemini can look things up, cite the sources it used, and return “Search Suggestions” that link to the corresponding Google Search results. This can reduce hallucinations on current-events questions, which makes it a major practical axis of “smartness.” (Google AI for Developers, Google Developers Blog)

What to try: ask a question with a moving target (e.g., “What changed in last night’s security bulletin?”). With grounding enabled, check whether the answer links to authoritative sources and reflects updates published after the model’s training cutoff.
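
Deciding what counts as “authoritative” is ultimately your call, but once you have the source URLs a grounded answer cites (after following any redirect links), a small allowlist check makes the comparison repeatable across runs. The domains below are placeholders for a security-bulletin question, not a recommendation, and the helper names are hypothetical.

```python
from urllib.parse import urlparse

# Placeholder allowlist for a security-bulletin question; edit for your own domain.
AUTHORITATIVE_DOMAINS = {"nvd.nist.gov", "cisa.gov", "source.android.com"}


def is_authoritative(url: str, allowlist: set[str] = AUTHORITATIVE_DOMAINS) -> bool:
    """True if the URL's host is an allowlisted domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    # Match "cisa.gov" and "www.cisa.gov", but not look-alikes such as "evilcisa.gov".
    return any(host == d or host.endswith("." + d) for d in allowlist)


def source_score(urls: list[str], allowlist: set[str] = AUTHORITATIVE_DOMAINS) -> float:
    """Share of cited URLs that point at allowlisted domains (0.0 if nothing was cited)."""
    if not urls:
        return 0.0
    return sum(is_authoritative(u, allowlist) for u in urls) / len(urls)


if __name__ == "__main__":
    cited = [
        "https://www.cisa.gov/news-events/alerts",
        "https://nvd.nist.gov/vuln/search",
        "https://random-blog.example.com/hot-take",
    ]
    for url in cited:
        print(url, is_authoritative(url))
    print(f"authoritative share: {source_score(cited):.2f}")
```

Matching on the parsed hostname rather than on a raw substring of the URL avoids counting look-alike domains or paths that merely mention a trusted site.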

3) Multimodal reasoning (images, audio, text together)

“Smart” increasingly means multimodal: understanding and generating across text, image, audio, and sometimes video. Google’s Gemini 2.5 Flash Image specializes in image creation and editing with multi-image fusion, style transfer, and natural-language edits (“remove the stain,” “blur the background,” “keep the same person”). Because it’s built on Gemini’s world knowledge, it tends to read scenes and diagrams with better semantic fidelity than classic text-to-image systems. (Google Developers Blog, Google DeepMind)

On the safety front, Google DeepMind’s SynthID adds an invisible watermark to AI-generated images to help with responsible attribution—a pragmatic piece of “smartness” that shows up after the model speaks, not just while it’s thinking. (Google DeepMind)

What to try: give the model two product shots and an empty living-room photo; tell it to place and scale the product, match shadows, and then nudge the color temperature warmer, without changing the product’s appearance. You’re testing consistency, scene understanding, and adherence to instructions across multiple images.

4) On-device intelligence vs. cloud: Gemini Nano

Another kind of “smart” is being useful in the right place. Gemini Nano runs on-device inside Android’s AICore system service for fast, private tasks—like summarizing a long Recorder audio file or offering Smart Reply suggestions—without sending sensitive content to the cloud. Devs target Nano through ML Kit GenAI or the experimental AI Edge SDK; end users mostly just see features “turn on” as phones update. This local capability broadens what we mean by intelligence: not only what a model can do but where and under which privacy constraints it can do it. (Android Developers)

What to try: put your phone in airplane mode, record a memo in Recorder, and ask for a summary. If it works offline on a supported device, that’s Nano quietly doing the job.

5) Integrations and workflow

It’s easy to underrate integrations—until you’re on a deadline. Gemini is tightly connected to Google Workspace (Docs, Sheets, Gmail) and shows up in Google’s developer stack (AI Studio, Vertex AI). Meanwhile, OpenAI’s models are embedded across the ChatGPT product line and API ecosystem, with rapid iterations like GPT-4.1 that target agents, coding, and long-context reliability. The point isn’t “who integrates more,” but whether the model meets you inside your existing tools and deployment realities (browser, mobile, enterprise security, data governance, and so on). (Google Developers Blog, OpenAI)

Benchmarks vs. reality

Public benchmarks (e.g., MMLU, MMMU, GPQA) can be useful, but they only capture slices of “smart.” Scores also move quickly as models update and vendors adjust training or tokenization. That’s why both Google and OpenAI now emphasize real-world diagnostics like long-context retrieval fidelity, tool-use reliability, and grounded citation quality rather than leaderboard wins alone. If your work depends on correctness, build task-specific evals: small unit tests for your prompts, regression checks for new app versions, and red-team lists of failure modes that matter to you (e.g., mixing up currencies or mislabeling a medical code). (OpenAI)
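
A task-specific eval can start as a plain list of prompts paired with pass/fail checks. The sketch below uses a stubbed `fake_model` function (hypothetical; swap in your real client call) and encodes two of the failure modes mentioned above, currency mix-ups and medical-code labels. Comparing failure lists between runs gives a basic regression check when the model or app version changes.

```python
from typing import Callable

# An eval case: (name, prompt, check). The check returns True if the answer is acceptable.
EvalCase = tuple[str, str, Callable[[str], bool]]


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; replace with your API client."""
    canned = {
        "Price of Plan A in euros?": "Plan A costs €49 per month.",
        "ICD-10-CM code for type 2 diabetes without complications?": "E11.9",
    }
    return canned.get(prompt, "")


def run_evals(model: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case and collect the names of the ones that fail."""
    failed = [name for name, prompt, check in cases if not check(model(prompt))]
    return {"passed": len(cases) - len(failed), "failed": failed}


def new_regressions(previous_failed: list[str], current_failed: list[str]) -> list[str]:
    """Cases that fail now but did not fail in the previous run."""
    return sorted(set(current_failed) - set(previous_failed))


CASES: list[EvalCase] = [
    # Currency: the answer must use euros and must not slip into dollars.
    ("currency-eur", "Price of Plan A in euros?", lambda a: "€" in a and "$" not in a),
    # Medical code: exact match on the expected ICD-10-CM code.
    ("icd10-t2dm", "ICD-10-CM code for type 2 diabetes without complications?",
     lambda a: a.strip() == "E11.9"),
    # Coverage: the model should return a non-empty answer.
    ("non-empty", "Summarize our refund policy.", lambda a: bool(a.strip())),
]

if __name__ == "__main__":
    report = run_evals(fake_model, CASES)
    print(report)
    print("new regressions:", new_regressions([], report["failed"]))
```

With the stub, the refund-policy case fails because the fake model has no canned answer for it, which is exactly the kind of gap a regression list should surface.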

Speed, latency, and cost

Perceived intelligence collapses if you’re waiting. In practice, on-device Nano wins for low-latency tasks such as quick labeling and short-form summarization; cloud models win when the task demands big context, heavy reasoning, or image synthesis.
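
Latency claims are easy to check yourself. The sketch below times any zero-argument callable with `time.perf_counter` and reports the median and a nearest-rank 95th percentile in milliseconds; wrapping an on-device call and a cloud call in such functions (your own code, not shown) lets you compare them on the same prompt. The `fake_call` stub is hypothetical.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency(call: Callable[[], object], runs: int = 20) -> dict:
    """Time `call` repeatedly; return p50 and nearest-rank p95 latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank percentile: the smallest sample with at least 95% of samples <= it.
    p95 = samples[math.ceil(0.95 * len(samples)) - 1]
    return {"runs": runs, "p50_ms": statistics.median(samples), "p95_ms": p95}


def fake_call() -> str:
    """Hypothetical stand-in for a model request; replace with a real call."""
    return " ".join(["token"] * 1000)


if __name__ == "__main__":
    print(measure_latency(fake_call, runs=50))
```

Reporting p95 alongside the median matters because users notice the slow tail, not the average.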

If you’re cost-sensitive, you can route simpler turns to smaller/cheaper models, keep complex turns on the bigger models, and cache intermediate artifacts so users don’t pay for repeats.
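
Routing and caching can be sketched in a few lines. The tier names, the keyword heuristic, and the `fake_backend` function below are all hypothetical placeholders; a real router might use a small classifier or measured quality data instead of keywords. Whatever you use, the cache key must include everything that changes the answer (here, the model name and the prompt).

```python
import hashlib
from typing import Callable

# Hypothetical tier names; substitute the cheaper and stronger models you actually use.
SMALL_MODEL = "small-fast-model"
LARGE_MODEL = "large-reasoning-model"

# Naive signals that a turn needs the bigger model (an assumption, not a rule).
HARD_MARKERS = ("analyze", "compare", "prove", "step by step")


def choose_model(prompt: str, max_small_chars: int = 2000) -> str:
    """Send long or reasoning-heavy prompts to the large tier, everything else to the small one."""
    text = prompt.lower()
    if len(prompt) > max_small_chars or any(m in text for m in HARD_MARKERS):
        return LARGE_MODEL
    return SMALL_MODEL


class CachedRouter:
    """Routes each prompt to a tier and caches answers so repeated turns are not billed twice."""

    def __init__(self, backend: Callable[[str, str], str]):
        self.backend = backend  # backend(model_name, prompt) -> answer
        self.cache: dict[str, str] = {}
        self.billed_calls = 0

    def ask(self, prompt: str) -> str:
        model = choose_model(prompt)
        # Key on model + prompt; the NUL separator keeps the two fields from running together.
        key = hashlib.sha256(f"{model}\0{prompt}".encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.billed_calls += 1
            self.cache[key] = self.backend(model, prompt)
        return self.cache[key]


def fake_backend(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real API call."""
    return f"[{model}] answer to: {prompt}"


if __name__ == "__main__":
    router = CachedRouter(fake_backend)
    print(router.ask("Translate 'good morning' into Spanish."))
    print(router.ask("Translate 'good morning' into Spanish."))  # served from cache
    print(router.ask("Compare these two pricing plans step by step."))
    print("billed calls:", router.billed_calls)
```

In this run only two calls reach the backend: the repeated translation request is answered from the cache.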

On the OpenAI side, GPT-4.1 aims to outperform GPT-4o on long-context tasks while improving cost efficiency; on the Google side, the Gemini 1.5 family includes Flash models optimized for speed.

Creative work: where Promptus simplifies Gemini image workflows

If your definition of “smart” includes getting things done with the fewest moving parts, a practical path for creative media is to use Promptus as your single browser workspace:

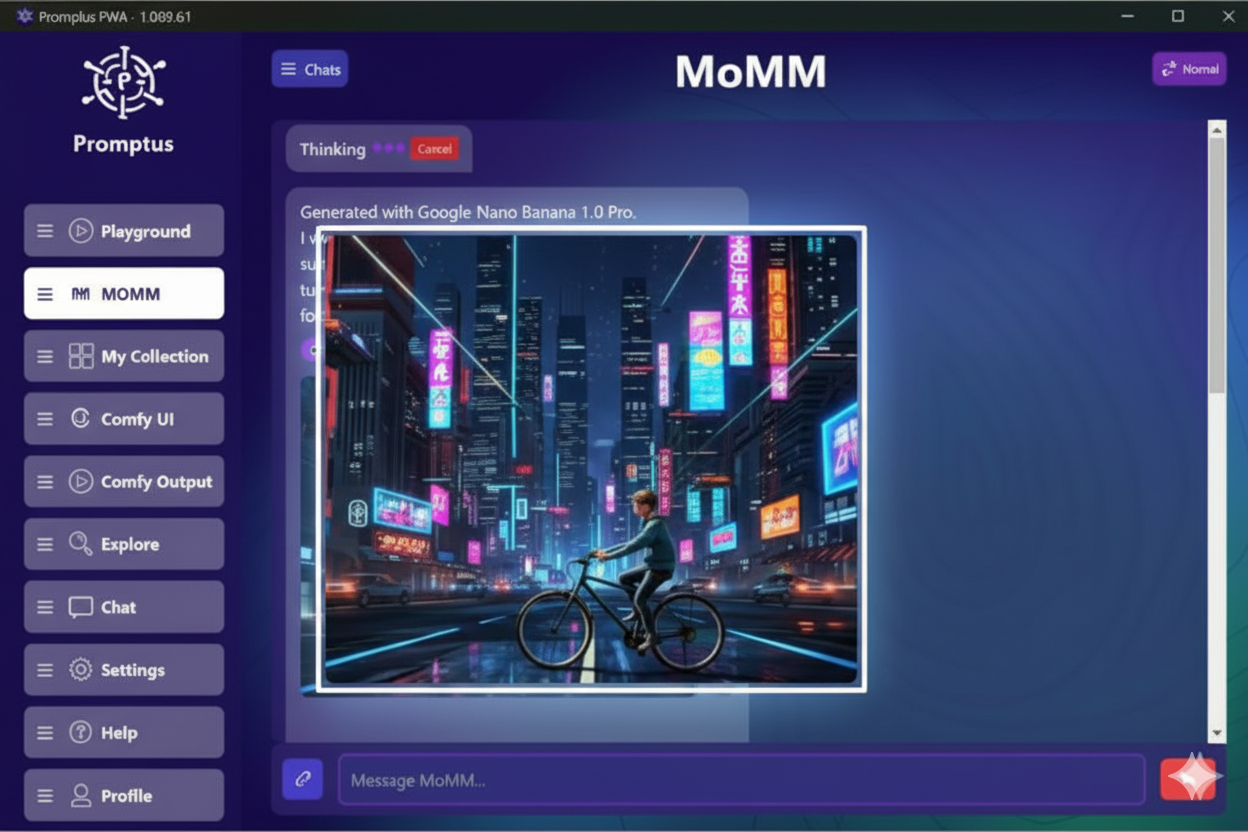

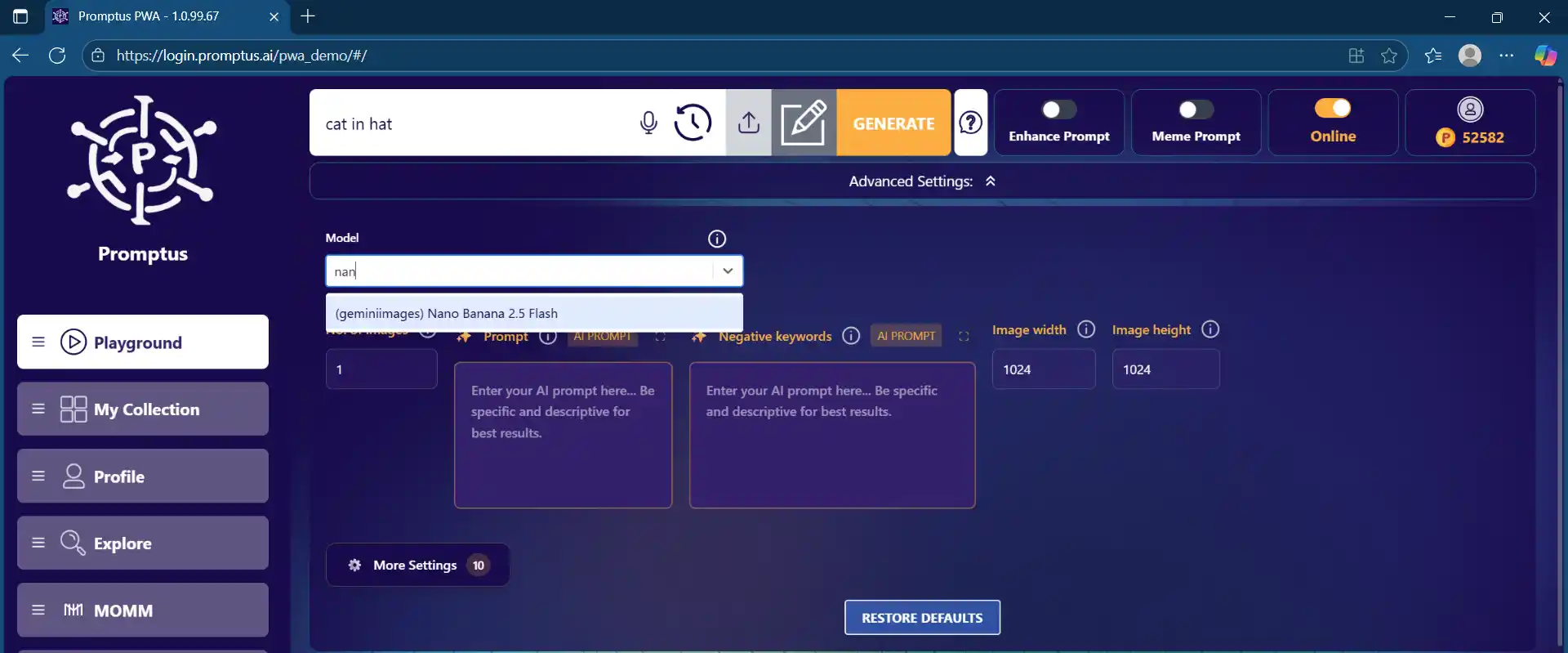

- Model access inside one UI: Choose Gemini 2.5 Flash Image (the model often nicknamed “Nano Banana”) from a model selector and generate or edit without leaving the page.

- Natural-language edits, multi-image fusion, and style transfer: Describe the change you want in plain English; blend multiple photos; keep a character consistent across scenes. Save the best takes into Collections and share them as a public gallery.

- Go beyond stills: When your image is ready, continue in the same workspace to turn visuals into short videos or pair them with music/audio—handy for social cut-downs and mood boards. (Functionality varies by platform; always check current feature lists.)

Because Promptus is a single web interface, you avoid juggling exports between tools; you can generate → edit → organize → publish from one place. For many teams, that’s the most “intelligent” workflow: less context-switching, fewer brittle hand-offs.

Which Gemini to Use

Use this quick guide to map needs to models:

I need current, source-linked answers about the real world.

Use Gemini with Grounding in AI Studio or the Gemini API (enable Google Search grounding), or use the Gemini app’s grounded modes where available. You should get fresher, better-sourced responses than from ungrounded chat.

I need to analyze giant documents or codebases.

Reach for Gemini 1.5 (long-context) models or OpenAI’s GPT-4.1. Both ecosystems now support very large contexts (up to ~1M tokens) with improved retrieval and reasoning under load. Test your own prompts.

I need private, instant help on my phone.

Use Gemini Nano features (e.g., Recorder summaries). They run on-device inside AICore, which improves privacy and responsiveness for short tasks.

I need high-quality image edits or consistent character art.

Use Gemini 2.5 Flash Image. For the simplest experience—model access + saving + publishing + multimedia—do it in Promptus.

I need provenance on images and safer sharing.

Favor outputs with SynthID invisible watermarking so collaborators can verify the origin later.

Practical experiments you can run today

- Grounded research memo

Ask Gemini (with Search grounding enabled) for a 3-paragraph brief on a rapidly changing topic, with sources, then click through the links. If the citations line up, that’s real “smartness,” not just eloquence.

- Long-context audit

Drop a 200-page manual into a long-context model. Ask for a change log and an open-questions list with section citations. This tests memory and retrieval rather than style.
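
Before uploading, it helps to estimate whether the manual fits the context window and to label sections so the model has something concrete to cite. The sketch assumes roughly 4 characters per token, a common rule of thumb for English text that varies by tokenizer and language; for exact numbers, use your provider’s token-counting tooling. The function names and the 8,192-token output reserve are illustrative assumptions. At an assumed 3,000 characters per page, a 200-page manual is about 600,000 characters, or roughly 150,000 tokens, well inside a 1M-token window.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; ~4 chars/token is a rule of thumb for English, not exact."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, context_tokens: int = 1_000_000,
                    reserve_for_output: int = 8_192, chars_per_token: float = 4.0) -> bool:
    """True if the estimated input plus an output reserve fits in the context window."""
    return estimate_tokens(text, chars_per_token) + reserve_for_output <= context_tokens


def label_sections(sections: list[str]) -> str:
    """Prefix each section with [S1], [S2], ... so answers can cite sections by label."""
    return "\n\n".join(f"[S{i}] {s.strip()}" for i, s in enumerate(sections, start=1))


if __name__ == "__main__":
    manual = "x" * 600_000  # stand-in for a 200-page manual at ~3,000 chars/page (assumption)
    print(estimate_tokens(manual))  # 150,000 under the 4-chars/token assumption
    print(fits_in_context(manual))
    print(label_sections(["Installation steps...", "Safety warnings..."]))
```

Asking the model to cite the `[S…]` labels makes its section citations easy to spot-check against the source.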

- On-device privacy check

Use Recorder → Summarize offline to validate that Nano is actually doing inference locally.

- Creative consistency

In Promptus, load Gemini 2.5 Flash Image, feed two photos of the same person, and instruct: “Fuse these into a travel postcard; keep the person’s face consistent; match lighting to golden hour.” Evaluate identity consistency and scene coherence.

So… how smart is Gemini?

Short answer: Smart enough to be useful in different ways across cloud and device.

- In the cloud, Gemini’s strengths are long-context reasoning, multimodal understanding, and grounded answers via Google Search, paired with creative media tools like Gemini 2.5 Flash Image for natural-language edits and multi-image fusion.

- On device, Gemini Nano makes phones feel natively intelligent—fast summaries, contextual suggestions, and accessibility features that work even when you’re offline.

Long answer: “Smart” depends on your definition. If you care about freshness and citations, enable grounding and judge the sources. If you care about modal breadth, give the model tasks that mix text and images and measure adherence to constraints. If you care about privacy and latency, try on-device Nano features.

And if your work is creative, tools like Promptus let you generate, edit, organize, and publish with minimal friction—turning smart capability into a smart workflow.
